Vedant Puri

PhD Candidate, Carnegie Mellon University

Efficient Transformer Architectures | Scientific Machine Learning

I design transformer architectures with explicit attention to scaling and memory efficiency. My recent work, FLARE, enables million-token regimes on a single GPU. I implement new architectures directly in PyTorch and Triton. My background spans high-performance computing, numerical analysis, and computational fluid dynamics.

Research Interests

- Efficient attention architectures

- Numerical methods for ML and for PDEs

- Scientific machine learning

Featured Work

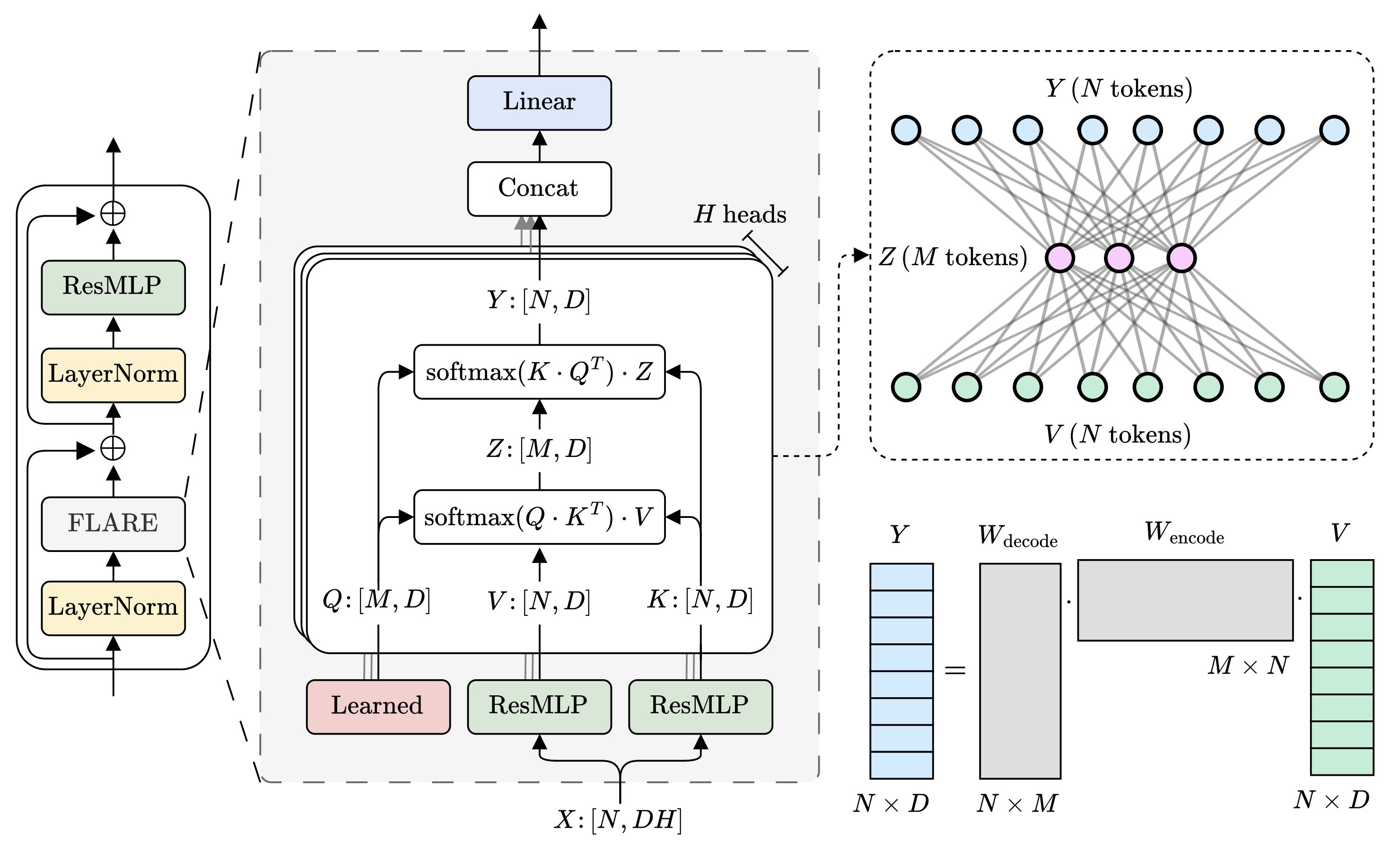

FLARE - Fast Low-rank Attention Routing Engine

- Derived a flexible low-rank reformulation of self-attention via latent routing.

- Reduced the quadratic cost of global token-to-token communication in self-attention to linear cost in sequence length.

- Demonstrated scaling to 1M tokens on a single H100 GPU, with over 200x speedup relative to vanilla self-attention.

- Implemented attention modules in PyTorch and Triton with reproducible scaling experiments.

- Evaluated across PDE surrogate modeling, NLP, and vision benchmarks.
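The general idea behind linear-cost latent routing can be illustrated with a small NumPy sketch. It assumes a generic two-step scheme: M learned latent tokens first gather information from all N input tokens, then each token reads back from the latents. This is not FLARE's published formulation. The function name `latent_routing_attention`, the learned `latents`, and the projection matrices are hypothetical stand-ins chosen for illustration.

```python
import numpy as np

def softmax(z, axis=-1):
    # Numerically stable softmax along `axis`
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def latent_routing_attention(x, latents, Wq, Wk, Wv):
    """Hypothetical two-step attention routed through M latent tokens.

    x:        (N, D) input tokens
    latents:  (M, D) learned latent queries, with M much smaller than N
    Wq/Wk/Wv: (D, D) projection matrices
    Cost is O(N * M * D) rather than the O(N^2 * D) of full self-attention.
    """
    d = x.shape[1]
    q, k, v = x @ Wq, x @ Wk, x @ Wv       # each (N, D)
    # Gather: each latent attends over all N tokens -> (M, N) weights
    gather = softmax(latents @ k.T / np.sqrt(d), axis=-1)
    z = gather @ v                         # (M, D) latent summaries
    # Scatter: each token attends over the M latents -> (N, M) weights
    scatter = softmax(q @ latents.T / np.sqrt(d), axis=-1)
    # The implicit N x N mixing matrix is scatter @ gather (rank <= M); it is never formed
    return scatter @ z                     # (N, D)

rng = np.random.default_rng(0)
N, M, D = 4096, 64, 32
x = rng.standard_normal((N, D))
latents = rng.standard_normal((M, D))
Wq, Wk, Wv = (rng.standard_normal((D, D)) / np.sqrt(D) for _ in range(3))
y = latent_routing_attention(x, latents, Wq, Wk, Wv)
print(y.shape)  # (4096, 32)
```

Because the number of latents M stays fixed, doubling N roughly doubles the work instead of quadrupling it. The effective token-to-token mixing matrix has rank at most M, which is the sense in which such a scheme is a low-rank reformulation of attention.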

FLARE for Language Modeling (Ongoing dissertation work)

Decoder-only architectures | 2025–Present

- Extending FLARE to decoder-only next-token prediction with causal attention.

- Enabling adaptive attention state size to control memory and compute during training and inference.

- Implementing fused Triton kernels for causal training, prefill, and decode.

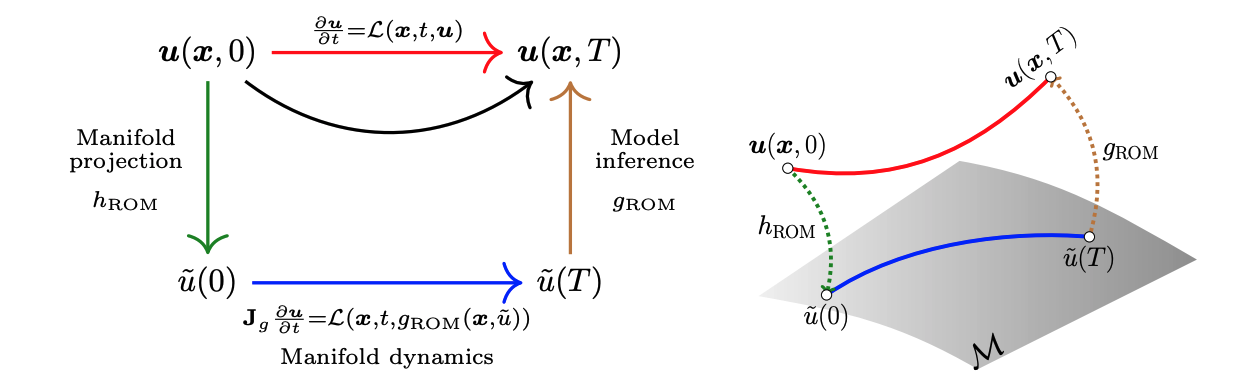

Hybrid equation-based + data-driven PDE modeling framework

- Introduced smooth neural fields as nonlinear spatial ansatz functions in equation-based reduced-order modeling.

- Retained physics-based Galerkin time evolution while learning expressive low-dimensional representations.

- Achieved a 200x speedup over full-order simulations in transport-dominated regimes.
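The coupling of a nonlinear spatial ansatz with equation-based time evolution can be illustrated with a toy example. This sketch rests on several assumptions. A two-parameter Gaussian bump stands in for the smooth neural field, and 1D linear advection serves as the full-order model. The latent parameters are evolved by projecting the PDE right-hand side onto the tangent space of the ansatz, a least-squares (Galerkin-type) projection. The names `ansatz`, `jacobian`, `rhs`, and `qdot` are hypothetical, and the projection used in the actual framework may differ.

```python
import numpy as np

def ansatz(x, q):
    """Nonlinear spatial ansatz g(x; q); a Gaussian bump stands in for a neural field.
    q = (c, w): center and width (hypothetical parameterization)."""
    c, w = q
    return np.exp(-((x - c) / w) ** 2)

def jacobian(x, q, eps=1e-6):
    # dg/dq by central finite differences (a neural field would use autodiff)
    cols = []
    for i in range(len(q)):
        dq = np.zeros_like(q)
        dq[i] = eps
        cols.append((ansatz(x, q + dq) - ansatz(x, q - dq)) / (2 * eps))
    return np.stack(cols, axis=1)  # (num_points, num_params)

def rhs(x, u, a):
    # Full-order PDE right-hand side for linear advection: u_t = -a u_x
    return -a * np.gradient(u, x)

def qdot(x, q, a):
    # Galerkin-type projection: least-squares solve of J(q) qdot = f,
    # equivalent to the normal equations J^T J qdot = J^T f
    J = jacobian(x, q)
    f = rhs(x, ansatz(x, q), a)
    return np.linalg.lstsq(J, f, rcond=None)[0]

x = np.linspace(-5.0, 15.0, 2001)
q = np.array([0.0, 1.0])           # initial center and width
a, dt, steps = 1.0, 0.01, 500
for _ in range(steps):
    q = q + dt * qdot(x, q, a)     # forward Euler in the 2-dimensional latent space
print(q)  # center close to a * dt * steps = 5.0, width close to 1.0
```

Only two latent parameters are integrated in time, while the physics enters through the PDE right-hand side evaluated on the ansatz. For pure advection, the projection recovers a center velocity of `a` and zero change in width, so the bump is transported without the smearing a fixed linear basis would introduce.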

Previous Work: Computational fluid dynamics on HPC systems

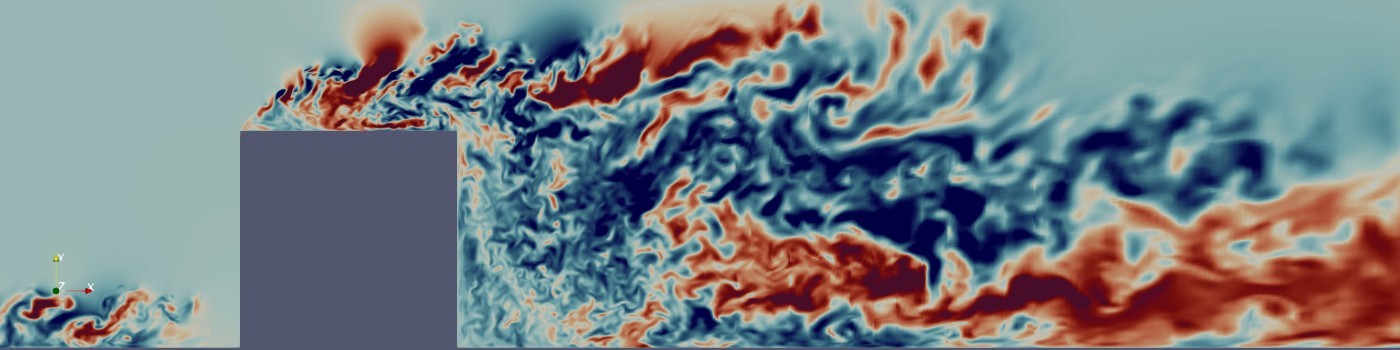

I previously worked on turbulence simulation and analysis workflows in high-performance computing settings, with emphasis on spectral element methods and large-scale post-processing. This background in numerical methods and PDE solvers informs how I design stable and efficient transformer architectures for scientific ML.

Velocity magnitude for flow past a wall-mounted cube at Reynolds number 3900, based on cube height. Computed with the spectral element code NEK5000 at the Argonne Leadership Computing Facility.


Not Work

Not So Up-to-Date Photography Portfolio

For the past decade, I have used a Canon DSLR as an excuse to walk around and photograph people, geometry, and city texture.

Hobbies

- Sports: squash, golf, CrossFit

Open Source

Julia Open Source Tools

SciMLOperators.jl

Operator abstractions for SciML and PDE workflows

LinearSolve.jl

Linear solver interface for scientific machine learning

Below is a nonexhaustive list of Julia projects that I have contributed to.